DataWritingCommand Logical Commands¶

DataWritingCommand is an extension of the UnaryCommand abstraction for logical commands that, when executed, write the result of a query (query data) to a relation.

Contract¶

Output Column Names¶

outputColumnNames: Seq[String]

The names of the output columns of the analyzed input query plan

Used when:

- DataWritingCommand is requested for the output columns

Query¶

query: LogicalPlan

The analyzed LogicalPlan representing the data to write (i.e. whose result will be inserted into a relation)

Used when:

- BasicOperators execution planning strategy is executed

- DataWritingCommand is requested for the child logical operator and the output columns
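Output Column Names and Query work together. As far as recent Spark 3.x sources go, the output columns are the query's output attributes renamed with outputColumnNames, one name per attribute. The sketch below is Spark-free and models that pairing. Attribute, LogicalPlan, Project and logicalPlanOutputWithNames are simplified, hypothetical stand-ins for the Catalyst classes, not the real API.

```scala
// Hypothetical, Spark-free stand-ins for Catalyst's Attribute and LogicalPlan.
final case class Attribute(name: String, dataType: String, exprId: Long) {
  // Same attribute (same exprId), only the name changes.
  def withName(newName: String): Attribute = copy(name = newName)
}

trait LogicalPlan {
  def output: Seq[Attribute]
}

final case class Project(output: Seq[Attribute]) extends LogicalPlan

// Pairs the analyzed query's output attributes with the user-facing column names.
def logicalPlanOutputWithNames(query: LogicalPlan, names: Seq[String]): Seq[Attribute] = {
  val outputAttributes = query.output
  require(
    outputAttributes.length == names.length,
    "The number of names must match the number of output attributes")
  outputAttributes.zip(names).map { case (attr, outputName) => attr.withName(outputName) }
}

val query = Project(Seq(
  Attribute("col1", "int", exprId = 1L),
  Attribute("upper(name)", "string", exprId = 2L)))

val outputColumns = logicalPlanOutputWithNames(query, Seq("id", "name"))
println(outputColumns.map(_.name)) // List(id, name)
```

Renaming rather than rebuilding the attributes keeps their expression IDs, so references to the query's output stay valid while the written columns get the names the user asked for.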

Executing Command¶

run(
  sparkSession: SparkSession,
  child: SparkPlan): Seq[Row]

Used when:

- CreateHiveTableAsSelectBase is requested to run
- DataWritingCommandExec physical operator is requested for the sideEffectResult
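DataWritingCommandExec keeps the result of run in sideEffectResult, which in Spark is a lazy value, so the write (the side effect) happens once no matter how many times the result is requested. The sketch below models that behaviour in plain Scala. Row, SparkSession, SparkPlan, CountingWrite and the class shapes are hypothetical stand-ins, not Spark's classes.

```scala
// Hypothetical stand-ins; not Spark's SparkSession, SparkPlan or Row.
final case class Row(values: Any*)
final case class SparkSession(appName: String)
final case class SparkPlan(description: String)

trait DataWritingCommand {
  def outputColumnNames: Seq[String]
  def run(sparkSession: SparkSession, child: SparkPlan): Seq[Row]
}

// Models DataWritingCommandExec: the command runs at most once, the first time
// sideEffectResult is accessed; later accesses return the cached rows.
final class DataWritingCommandExec(
    cmd: DataWritingCommand,
    child: SparkPlan,
    session: SparkSession) {
  lazy val sideEffectResult: Seq[Row] = cmd.run(session, child)
  def executeCollect(): Seq[Row] = sideEffectResult
}

// A toy command that counts how often it was executed.
final class CountingWrite extends DataWritingCommand {
  var runs = 0
  val outputColumnNames: Seq[String] = Seq("id")
  def run(sparkSession: SparkSession, child: SparkPlan): Seq[Row] = {
    runs += 1
    println(s"writing the result of ${child.description}")
    Seq.empty // this toy write returns no rows
  }
}

val cmd = new CountingWrite
val exec = new DataWritingCommandExec(cmd, SparkPlan("Scan parquet"), SparkSession("demo"))
exec.executeCollect()
exec.executeCollect()
println(cmd.runs) // 1
```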

Implementations¶

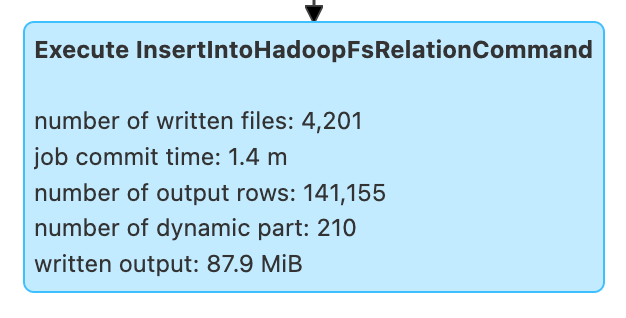

Performance Metrics¶

job commit time¶

number of dynamic part¶

Number of partitions (when processing write job statistics)

Corresponds to the number of times newPartition of BasicWriteTaskStatsTracker was called (i.e., to announce that a new partition is about to be written)

number of output rows¶

number of written files¶

task commit time¶

written output¶
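How these metrics come together can be sketched as per-task counters that are summed on the driver once the job commits. This is a simplified model that follows the description of number of dynamic part above (one count per newPartition call). TaskStats, TaskStatsTracker and processStats are hypothetical names; written output and task commit time are left out for brevity.

```scala
// Hypothetical per-task summary.
final case class TaskStats(numPartitions: Int, numFiles: Int, numRows: Long)

// Hypothetical per-task tracker: counts newPartition calls, files and rows.
final class TaskStatsTracker {
  private var numPartitions = 0
  private var numFiles = 0
  private var numRows = 0L

  // Announces that a new partition is about to be written.
  def newPartition(): Unit = numPartitions += 1
  def newFile(): Unit = numFiles += 1
  def newRow(): Unit = numRows += 1

  def stats: TaskStats = TaskStats(numPartitions, numFiles, numRows)
}

// Hypothetical job-level aggregation keyed by the metric names listed above.
def processStats(stats: Seq[TaskStats], jobCommitTimeMs: Long): Map[String, Long] =
  Map(
    "number of dynamic part" -> stats.map(_.numPartitions.toLong).sum,
    "number of written files" -> stats.map(_.numFiles.toLong).sum,
    "number of output rows" -> stats.map(_.numRows).sum,
    "job commit time" -> jobCommitTimeMs)

// Task 1 writes two rows into one partition.
val t1 = new TaskStatsTracker
t1.newPartition(); t1.newFile(); t1.newRow(); t1.newRow()

// Task 2 writes one row into each of two partitions.
val t2 = new TaskStatsTracker
t2.newPartition(); t2.newFile(); t2.newRow()
t2.newPartition(); t2.newFile(); t2.newRow()

val jobMetrics = processStats(Seq(t1.stats, t2.stats), jobCommitTimeMs = 42L)
println(jobMetrics("number of dynamic part")) // 3
```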

Execution Planning¶

DataWritingCommand is resolved to a DataWritingCommandExec physical operator by BasicOperators execution planning strategy.
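The planning rule boils down to a single pattern match: any DataWritingCommand becomes a DataWritingCommandExec whose child is the physical plan of the command's query. The sketch below models this with hypothetical, heavily simplified plan nodes (LocalRelation, InsertIntoTable, LocalTableScanExec). Spark's BasicOperators uses planLater for the child, whereas this sketch simply recurses.

```scala
// Hypothetical, heavily simplified logical plan nodes.
sealed trait LogicalPlan
final case class LocalRelation(numRows: Int) extends LogicalPlan
trait DataWritingCommand extends LogicalPlan {
  def query: LogicalPlan
}
final case class InsertIntoTable(table: String, query: LogicalPlan) extends DataWritingCommand

// Hypothetical, heavily simplified physical plan nodes.
sealed trait SparkPlan
final case class LocalTableScanExec(numRows: Int) extends SparkPlan
final case class DataWritingCommandExec(cmd: DataWritingCommand, child: SparkPlan) extends SparkPlan

// Mimics the shape of the BasicOperators case for write commands.
def plan(logical: LogicalPlan): SparkPlan = logical match {
  case cmd: DataWritingCommand => DataWritingCommandExec(cmd, plan(cmd.query))
  case LocalRelation(n)        => LocalTableScanExec(n)
}

val physical = plan(InsertIntoTable("t", LocalRelation(3)))
println(physical)
// DataWritingCommandExec(InsertIntoTable(t,LocalRelation(3)),LocalTableScanExec(3))
```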

BasicWriteJobStatsTracker¶

basicWriteJobStatsTracker(
  hadoopConf: Configuration): BasicWriteJobStatsTracker // uses the command's own metrics
basicWriteJobStatsTracker(
  metrics: Map[String, SQLMetric],
  hadoopConf: Configuration): BasicWriteJobStatsTracker

basicWriteJobStatsTracker creates a new BasicWriteJobStatsTracker (with the given Hadoop Configuration and the metrics).

basicWriteJobStatsTracker is used when:

- FileFormatWriter is requested to write data out
- InsertIntoHadoopFsRelationCommand logical command is executed

- SaveAsHiveFile logical command is executed (and requested to saveAsHiveFile)
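The two overloads relate as a simple delegation: the one that takes only a Hadoop Configuration passes the command's own metrics to the one that takes them explicitly. A plain-Scala sketch of that shape follows. Configuration, SQLMetric, BasicWriteJobStatsTracker, DemoWrite and the "numFiles" key are hypothetical stand-ins here; the real tracker also wraps the Hadoop configuration for serialization, which is omitted.

```scala
// Hypothetical stand-ins for Hadoop's Configuration and Spark's SQLMetric.
final class Configuration(val settings: Map[String, String] = Map.empty)
final class SQLMetric(val name: String) { var value = 0L }

final class BasicWriteJobStatsTracker(
    val hadoopConf: Configuration,
    val metrics: Map[String, SQLMetric])

trait DataWritingCommand {
  // Each command owns its own set of write metrics.
  def metrics: Map[String, SQLMetric]

  // Overload that reuses the command's own metrics.
  def basicWriteJobStatsTracker(hadoopConf: Configuration): BasicWriteJobStatsTracker =
    DataWritingCommand.basicWriteJobStatsTracker(metrics, hadoopConf)
}

object DataWritingCommand {
  // Overload that takes the metrics explicitly.
  def basicWriteJobStatsTracker(
      metrics: Map[String, SQLMetric],
      hadoopConf: Configuration): BasicWriteJobStatsTracker =
    new BasicWriteJobStatsTracker(hadoopConf, metrics)
}

// A toy command with a single (hypothetical) metric.
final class DemoWrite extends DataWritingCommand {
  val metrics: Map[String, SQLMetric] =
    Map("numFiles" -> new SQLMetric("number of written files"))
}

val demo = new DemoWrite
val tracker = demo.basicWriteJobStatsTracker(new Configuration())
println(tracker.metrics.keySet) // Set(numFiles)
```

Keeping the explicit overload separate lets callers that hold metrics but no command instance build the same tracker.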