HiveExternalCatalog¶

HiveExternalCatalog is an ExternalCatalog for SparkSession with Hive support enabled.

HiveExternalCatalog uses a HiveClient to interact with a Hive metastore.

Creating Instance¶

HiveExternalCatalog takes the following to be created:

- SparkConf (Spark Core)
- Configuration (Apache Hadoop)

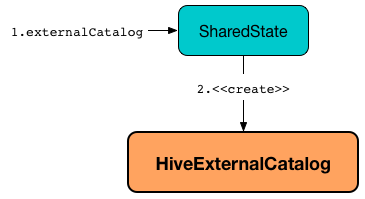

HiveExternalCatalog is created when:

SharedState is requested for the ExternalCatalog (and spark.sql.catalogImplementation is hive).

restoreTableMetadata¶

restoreTableMetadata(

inputTable: CatalogTable): CatalogTable

restoreTableMetadata...FIXME

restoreTableMetadata is used when:

HiveExternalCatalog is requested for the metadata of a table, the metadata of multiple tables, and listPartitionsByFilter

restoreHiveSerdeTable¶

restoreHiveSerdeTable(

table: CatalogTable): CatalogTable

restoreHiveSerdeTable...FIXME

restoreDataSourceTable¶

restoreDataSourceTable(

table: CatalogTable,

provider: String): CatalogTable

restoreDataSourceTable...FIXME

Looking Up BucketSpec in Table Properties¶

getBucketSpecFromTableProperties(

metadata: CatalogTable): Option[BucketSpec]

getBucketSpecFromTableProperties looks up the spark.sql.sources.schema.numBuckets property among the properties of the given CatalogTable metadata.

If found, getBucketSpecFromTableProperties creates a BucketSpec with the following:

| BucketSpec | Metadata Property |
|---|---|
| numBuckets | spark.sql.sources.schema.numBuckets |
| bucketColumnNames | spark.sql.sources.schema.numBucketCols, spark.sql.sources.schema.bucketCol.N |
| sortColumnNames | spark.sql.sources.schema.numSortCols, spark.sql.sources.schema.sortCol.N |
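The lookup can be sketched in plain Scala over a `Map[String, String]` of table properties. This is only an illustration, not the Spark source: `BucketSpecSketch` and `BucketSpecFromProperties` are hypothetical stand-ins (so the code runs without Spark on the classpath), and the sketch assumes that `N` in the `bucketCol.N` and `sortCol.N` keys is a zero-based index.

```scala
// Hypothetical stand-in for Spark's BucketSpec (not the real class).
final case class BucketSpecSketch(
    numBuckets: Int,
    bucketColumnNames: Seq[String],
    sortColumnNames: Seq[String])

object BucketSpecFromProperties {
  private val Prefix = "spark.sql.sources.schema."

  // Reads num<Type>Cols and then <type>Col.0, <type>Col.1, ...
  // Assumption: column indices are zero-based.
  private def columnNames(props: Map[String, String], colType: String): Seq[String] =
    for {
      numCols <- props.get(s"${Prefix}num${colType.capitalize}Cols").toSeq
      index <- 0 until numCols.toInt
    } yield props.getOrElse(
      s"$Prefix${colType}Col.$index",
      sys.error(s"Missing $colType column at index $index"))

  // Returns None when the numBuckets property is not defined.
  def fromProperties(props: Map[String, String]): Option[BucketSpecSketch] =
    props.get(s"${Prefix}numBuckets").map { numBuckets =>
      BucketSpecSketch(
        numBuckets.toInt,
        columnNames(props, "bucket"),
        columnNames(props, "sort"))
    }
}
```

With properties that define `numBuckets` as `4`, two bucket columns and one sort column, `fromProperties` gives a defined value with those column names in index order. With no `numBuckets` property it gives `None`, regardless of any other keys.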

restorePartitionMetadata¶

restorePartitionMetadata(

partition: CatalogTablePartition,

table: CatalogTable): CatalogTablePartition

restorePartitionMetadata...FIXME

restorePartitionMetadata is used when:

HiveExternalCatalog is requested to getPartition and getPartitionOption

Restoring Table Statistics from Table Properties (from Hive Metastore)¶

statsFromProperties(

properties: Map[String, String],

table: String): Option[CatalogStatistics]

statsFromProperties collects the statistics-related properties, i.e. those with keys that start with the spark.sql.statistics prefix.

If no keys have the prefix, statsFromProperties returns None.

Otherwise, statsFromProperties creates a CatalogColumnStat for every column in the schema.

For every column name in the schema, statsFromProperties first checks that the key spark.sql.statistics.colStats.[name].version exists (a marker that column statistics are available in the statistics properties). If so, it collects all the keys that start with the spark.sql.statistics.colStats.[name] prefix and converts them to a CatalogColumnStat for the column.

In the end, statsFromProperties creates a CatalogStatistics as follows:

| Catalog Statistic | Value |
|---|---|
| sizeInBytes | spark.sql.statistics.totalSize property |
| rowCount | spark.sql.statistics.numRows property |
| colStats | Column names and their CatalogColumnStats |
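The steps above can be approximated in plain Scala. This is a minimal sketch under stated assumptions, not the Spark implementation: `StatsSketch` and `StatsFromProperties` are hypothetical names, per-column statistics are kept as raw key-value maps instead of CatalogColumnStat, and the column names are passed in directly rather than taken from a table schema.

```scala
// Hypothetical stand-in for CatalogStatistics; column statistics stay as raw maps.
final case class StatsSketch(
    sizeInBytes: BigInt,
    rowCount: Option[BigInt],
    colStats: Map[String, Map[String, String]])

object StatsFromProperties {
  private val StatsPrefix = "spark.sql.statistics."
  private val ColStatsPrefix = StatsPrefix + "colStats."

  def fromProperties(
      properties: Map[String, String],
      columnNames: Seq[String]): Option[StatsSketch] = {
    // Keep only the statistics-related properties.
    val statsProps = properties.filter { case (key, _) => key.startsWith(StatsPrefix) }
    if (statsProps.isEmpty) None
    else {
      val colStats = columnNames.flatMap { name =>
        val keyPrefix = s"$ColStatsPrefix$name."
        // The version key marks that statistics exist for this column.
        if (statsProps.contains(keyPrefix + "version")) {
          val stats = statsProps.collect {
            case (key, value) if key.startsWith(keyPrefix) =>
              key.drop(keyPrefix.length) -> value
          }
          Some(name -> stats)
        } else None
      }.toMap
      Some(StatsSketch(
        // Assumption: totalSize is present whenever any statistics key is;
        // a missing key throws NoSuchElementException here.
        sizeInBytes = BigInt(statsProps(StatsPrefix + "totalSize")),
        rowCount = statsProps.get(StatsPrefix + "numRows").map(BigInt(_)),
        colStats = colStats))
    }
  }
}
```

In this sketch a column whose properties lack the `version` key is skipped, so it does not appear in `colStats` even when other keys with its prefix are present.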

statsFromProperties is used when:

HiveExternalCatalog is requested to restore metadata of a table or a partition

Demo¶

import org.apache.spark.sql.internal.StaticSQLConf

val catalogType = spark.conf.get(StaticSQLConf.CATALOG_IMPLEMENTATION.key)

assert(catalogType == "hive")

assert(spark.sessionState.conf.getConf(StaticSQLConf.CATALOG_IMPLEMENTATION) == "hive")

assert(spark.conf.get("spark.sql.catalogImplementation") == "hive")

val metastore = spark.sharedState.externalCatalog

import org.apache.spark.sql.catalyst.catalog.ExternalCatalog

assert(metastore.isInstanceOf[ExternalCatalog])

scala> println(metastore)

org.apache.spark.sql.catalyst.catalog.ExternalCatalogWithListener@277ffb17

scala> println(metastore.unwrapped)

org.apache.spark.sql.hive.HiveExternalCatalog@4eda3af9

Logging¶

Enable ALL logging level for the org.apache.spark.sql.hive.HiveExternalCatalog logger to see what happens inside.

Add the following lines to conf/log4j2.properties:

logger.HiveExternalCatalog.name = org.apache.spark.sql.hive.HiveExternalCatalog

logger.HiveExternalCatalog.level = all

Refer to Logging.