DiskStore¶

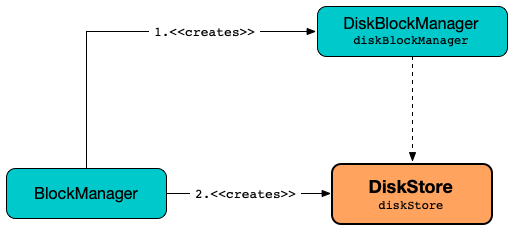

DiskStore manages data blocks on disk for BlockManager.

Creating Instance¶

DiskStore takes the following to be created:

- SparkConf

- DiskBlockManager

- SecurityManager

DiskStore is created for BlockManager.

Block Sizes¶

blockSizes: ConcurrentHashMap[BlockId, Long]

DiskStore uses ConcurrentHashMap (Java) as a registry of blocks and the data size (on disk).

A new entry is added when DiskStore is requested to put or moveFileToBlock.

An entry is removed when DiskStore is requested to remove.
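The registry can be pictured with a minimal sketch. `BlockId` here is a hypothetical one-field stand-in for Spark's class, and the method names `record`, `sizeOf` and `forget` are illustrative only, not DiskStore's API. Returning 0 for an unknown block is also an assumption of this sketch.

```scala
import java.util.concurrent.ConcurrentHashMap

// Hypothetical stand-in for Spark's BlockId (illustration only)
final case class BlockId(name: String)

object BlockSizesSketch {
  // Registry of blocks and their data sizes on disk (in bytes)
  private val blockSizes = new ConcurrentHashMap[BlockId, Long]()

  // Adds (or overwrites) an entry, as put and moveFileToBlock are described to do
  def record(blockId: BlockId, size: Long): Unit = {
    blockSizes.put(blockId, size)
  }

  // Looks up the size of a block; assumption: 0 when the block is not registered
  def sizeOf(blockId: BlockId): Long = blockSizes.getOrDefault(blockId, 0L)

  // Removes the entry, as remove is described to do
  def forget(blockId: BlockId): Unit = {
    blockSizes.remove(blockId)
  }
}
```

A ConcurrentHashMap lets several threads register and look up sizes without external locking, which is presumably why it is used for this registry.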

putBytes¶

putBytes(
  blockId: BlockId,
  bytes: ChunkedByteBuffer): Unit

putBytes puts the block, using a write function that writes the buffer out to the given channel.
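What "writing the buffer out to a channel" involves can be sketched as follows. This is not Spark's ChunkedByteBuffer. A plain `Seq[ByteBuffer]` is assumed as a stand-in for the chunks, and `writeFully` and `writeToArray` are hypothetical helper names.

```scala
import java.io.ByteArrayOutputStream
import java.nio.ByteBuffer
import java.nio.channels.{Channels, WritableByteChannel}

object PutBytesSketch {
  // Writes every chunk completely; a single write call may accept only part of a buffer
  def writeFully(chunks: Seq[ByteBuffer], channel: WritableByteChannel): Unit = {
    for (chunk <- chunks) {
      val view = chunk.duplicate() // leaves the caller's position and limit untouched
      while (view.hasRemaining) {
        channel.write(view)
      }
    }
  }

  // Hypothetical helper: collects everything written to a channel into a byte array
  def writeToArray(chunks: Seq[ByteBuffer]): Array[Byte] = {
    val out = new ByteArrayOutputStream()
    val channel = Channels.newChannel(out)
    try writeFully(chunks, channel) finally channel.close()
    out.toByteArray
  }
}
```

In DiskStore the channel would be the one that put opens on the block file rather than an in-memory stream.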

putBytes is used when:

- ByteBufferBlockStoreUpdater is requested to saveToDiskStore

- BlockManager is requested to dropFromMemory

getBytes¶

getBytes(
  blockId: BlockId): BlockData
getBytes(
  f: File,
  blockSize: Long): BlockData

getBytes requests the DiskBlockManager for the block file and looks up the block size (using getSize).

getBytes requests the SecurityManager for the I/O encryption key (getIOEncryptionKey) and returns an EncryptedBlockData if the key is available or a DiskBlockData otherwise.
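The choice between the two BlockData types can be sketched as below. The case classes are simplified, hypothetical stand-ins for Spark's classes, and modelling the encryption key as `Option[Array[Byte]]` is an assumption made for illustration.

```scala
import java.io.File

object GetBytesSketch {
  // Hypothetical, simplified stand-ins for Spark's BlockData implementations
  sealed trait BlockData { def size: Long }
  final case class DiskBlockData(file: File, size: Long) extends BlockData
  final case class EncryptedBlockData(file: File, size: Long, key: Array[Byte]) extends BlockData

  // ioEncryptionKey stands for what the SecurityManager gives for getIOEncryptionKey
  def getBytes(file: File, blockSize: Long, ioEncryptionKey: Option[Array[Byte]]): BlockData =
    ioEncryptionKey match {
      case Some(key) => EncryptedBlockData(file, blockSize, key) // key available: encrypted view
      case None      => DiskBlockData(file, blockSize)           // no key: plain on-disk view
    }
}
```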

getBytes is used when:

- TempFileBasedBlockStoreUpdater is requested to blockData

- BlockManager is requested to getLocalValues, doGetLocalBytes

getSize¶

getSize(
  blockId: BlockId): Long

getSize looks up the block in the blockSizes registry.

getSize is used when:

- BlockManager is requested to getStatus, getCurrentBlockStatus, doPutIterator

- DiskStore is requested for the block bytes

moveFileToBlock¶

moveFileToBlock(
  sourceFile: File,
  blockSize: Long,
  targetBlockId: BlockId): Unit

moveFileToBlock...FIXME

moveFileToBlock is used when:

- TempFileBasedBlockStoreUpdater is requested to saveToDiskStore

Checking if Block File Exists¶

contains(
  blockId: BlockId): Boolean

contains requests the DiskBlockManager for the block file and checks whether the file exists on disk.
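A minimal sketch of this check follows. The `blockFile` helper is a hypothetical simplification that maps a block name straight to a file in one directory. How the DiskBlockManager actually lays out block files is not covered here.

```scala
import java.io.File

object ContainsSketch {
  // Hypothetical stand-in for asking the DiskBlockManager for the block file
  def blockFile(dir: File, blockName: String): File = new File(dir, blockName)

  // A block is "contained" only if its file is physically present
  def contains(dir: File, blockName: String): Boolean =
    blockFile(dir, blockName).exists()
}
```

Note that the check goes to the file system rather than to the blockSizes registry.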

contains is used when:

- BlockManager is requested to getStatus, getCurrentBlockStatus, getLocalValues, doGetLocalBytes, dropFromMemory

- DiskStore is requested to put

Persisting Block to Disk¶

put(
  blockId: BlockId)(
  writeFunc: WritableByteChannel => Unit): Unit

put prints out the following DEBUG message to the logs:

Attempting to put block [blockId]

put requests the DiskBlockManager for the block file for the input BlockId.

put opens the block file for writing (wrapped into a CountingWritableChannel to count the bytes written). put executes the given writeFunc function (with the WritableByteChannel of the block file) and saves the bytes written (to the blockSizes registry).

In the end, put prints out the following DEBUG message to the logs:

Block [fileName] stored as [size] file on disk in [time] ms

In case of any exception, put deletes the block file.

put throws an IllegalStateException when the block is already stored:

Block [blockId] is already present in the disk store
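The flow above (reject a block that is already stored, count the bytes written, record the size, delete the file on failure) can be sketched as follows. This is an illustration under stated assumptions, not DiskStore's code. Blocks are identified by a plain name, the block file lives directly in a given directory, `CountingChannel` is a hypothetical wrapper in the spirit of CountingWritableChannel, and logging is left out.

```scala
import java.io.{File, FileOutputStream}
import java.nio.ByteBuffer
import java.nio.channels.WritableByteChannel
import java.util.concurrent.ConcurrentHashMap

object PutSketch {
  // Hypothetical wrapper that counts the bytes passing through to the underlying channel
  final class CountingChannel(sink: WritableByteChannel) extends WritableByteChannel {
    private var count = 0L
    def getCount: Long = count
    override def write(src: ByteBuffer): Int = {
      val written = sink.write(src)
      if (written > 0) count += written
      written
    }
    override def isOpen(): Boolean = sink.isOpen()
    override def close(): Unit = sink.close()
  }

  // Block name -> bytes written (plays the role of the blockSizes registry)
  val blockSizes = new ConcurrentHashMap[String, Long]()

  def put(dir: File, blockName: String)(writeFunc: WritableByteChannel => Unit): Unit = {
    val file = new File(dir, blockName)
    if (file.exists()) {
      throw new IllegalStateException(s"Block $blockName is already present in the disk store")
    }
    val out = new CountingChannel(new FileOutputStream(file).getChannel)
    var succeeded = false
    try {
      writeFunc(out)                          // the caller decides what gets written
      blockSizes.put(blockName, out.getCount) // remember the size on disk
      succeeded = true
    } finally {
      out.close()
      if (!succeeded) file.delete()           // do not leave a partial block file behind
    }
  }
}
```

A caller would pass the write logic as the second argument list, for example `PutSketch.put(dir, "b1") { ch => ch.write(buffer); () }`, where `dir` and `buffer` are values supplied by the caller.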

put is used when:

- BlockManager is requested to doPutIterator and dropFromMemory

- DiskStore is requested to putBytes

Removing Block¶

remove(
  blockId: BlockId): Boolean

remove...FIXME

remove is used when:

- BlockManager is requested to removeBlockInternal

- DiskStore is requested to put (and an IOException is thrown)

Logging¶

Enable the ALL logging level for the org.apache.spark.storage.DiskStore logger to see what happens inside.

Add the following line to conf/log4j.properties:

log4j.logger.org.apache.spark.storage.DiskStore=ALL

Refer to Logging.