ExecutorSource¶

ExecutorSource is a Source of Executors.

Creating Instance¶

ExecutorSource takes the following to be created:

- ThreadPoolExecutor

- Executor ID (unused)

- File System Schemes (to report based on spark.executor.metrics.fileSystemSchemes)

ExecutorSource is created when:

Executoris created

Name¶

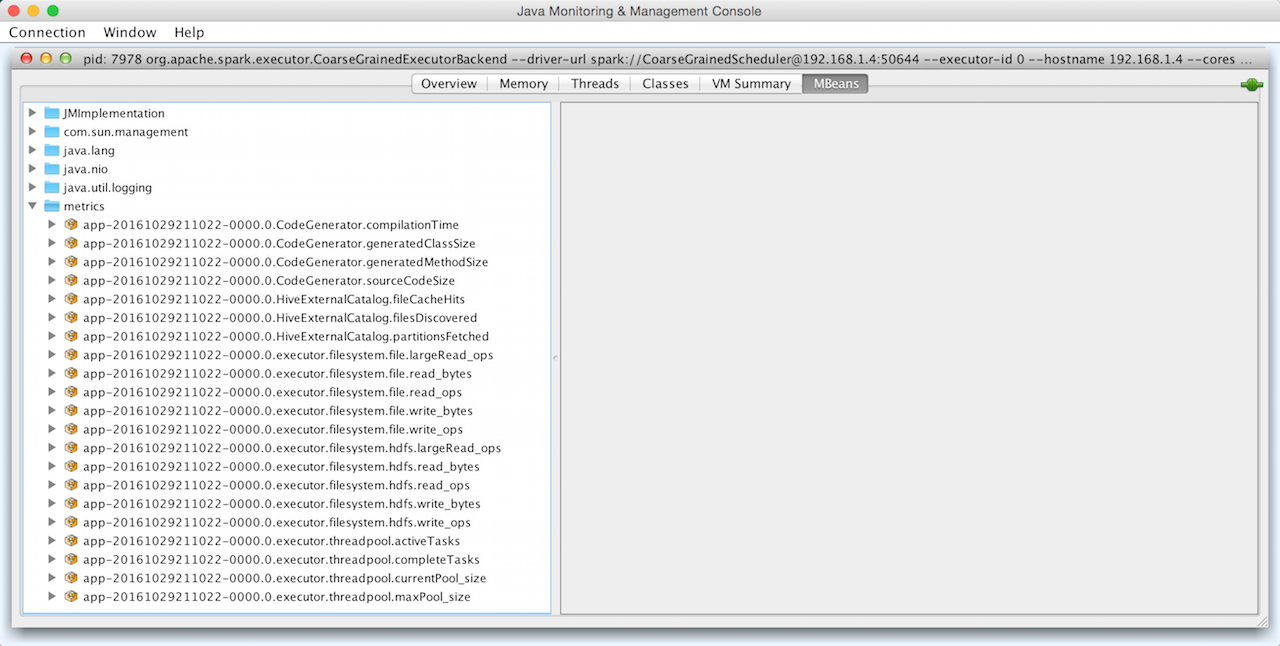

ExecutorSource is known under the name executor.

Metrics¶

metricRegistry: MetricRegistry

metricRegistry is part of the Source abstraction.

| Name | Description |

|---|---|

| threadpool.activeTasks | Approximate number of threads that are actively executing tasks (based on ThreadPoolExecutor.getActiveCount) |

| others |