Executor

Spark applications start one or more Executors for executing tasks.

By default (with Static Allocation of Executors), executors run for the entire lifetime of a Spark application. With Dynamic Allocation, by contrast, idle executors can be removed while the application is still running.

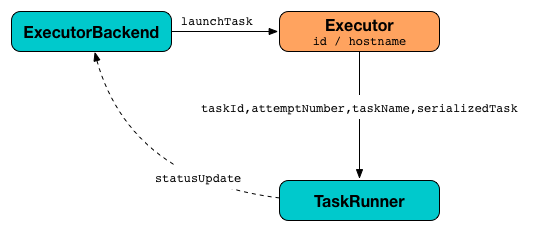

Executors are managed by ExecutorBackend.

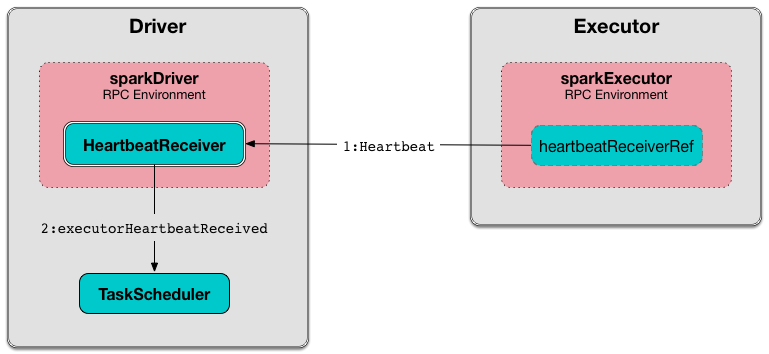

Executors report heartbeats and partial metrics for active tasks to the HeartbeatReceiver RPC Endpoint on the driver.

Executors provide in-memory storage for RDDs that are cached in Spark applications (via BlockManager).

When started, an executor first registers itself with the driver. The registration establishes a direct communication channel to the driver, over which the executor accepts tasks for execution.

Executor offers are described by executor id and the host on which an executor runs.

Executors can run multiple tasks over their lifetime, both in parallel and sequentially, and track running tasks.

Executors use an Executor task launch worker thread pool for launching tasks.
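The idea of a worker thread pool that launches tasks and tracks the running ones can be sketched in plain Java. This is a minimal illustration, not Spark's actual code: the class `ExecutorSketch`, its methods `launchTask`, `runningTaskCount` and `stop`, and the exact thread-name format are assumptions made for the example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified stand-in for an executor (not Spark's class).
class ExecutorSketch {
    // Daemon threads with a recognizable name prefix (naming is illustrative).
    private static final ThreadFactory WORKER_FACTORY = new ThreadFactory() {
        private final AtomicInteger counter = new AtomicInteger();

        @Override
        public Thread newThread(Runnable r) {
            Thread t = new Thread(r, "Executor task launch worker-" + counter.getAndIncrement());
            t.setDaemon(true);
            return t;
        }
    };

    // A cached pool grows on demand, so several tasks can run in parallel.
    private final ExecutorService threadPool = Executors.newCachedThreadPool(WORKER_FACTORY);

    // Registry of tasks that were launched and have not finished yet.
    private final Map<Long, Runnable> runningTasks = new ConcurrentHashMap<>();

    void launchTask(long taskId, Runnable body) {
        Runnable tracked = () -> {
            try {
                body.run();
            } finally {
                // Deregister the task whether it succeeded or failed.
                runningTasks.remove(taskId);
            }
        };
        runningTasks.put(taskId, tracked);
        threadPool.execute(tracked);
    }

    int runningTaskCount() {
        return runningTasks.size();
    }

    void stop() throws InterruptedException {
        threadPool.shutdown();
        threadPool.awaitTermination(5, TimeUnit.SECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        ExecutorSketch executor = new ExecutorSketch();
        for (long id = 0; id < 3; id++) {
            executor.launchTask(id, () ->
                System.out.println("running on " + Thread.currentThread().getName()));
        }
        executor.stop();
        System.out.println("tasks still running: " + executor.runningTaskCount());
    }
}
```

A task is registered before it is handed to the pool and removed in a `finally` block, so the registry covers tasks that are queued or executing and never keeps a finished one.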

Executors send metrics (and heartbeats) using the Heartbeat Sender Thread.
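A periodic heartbeat that carries partial metrics for the active tasks can be sketched with a single-threaded scheduler. This is a hedged illustration rather than Spark's implementation: `HeartbeatSketch`, the `Heartbeat` message, the `recordsReadByTask` metric, the `heartbeat-sender` thread name, and the `Consumer` standing in for the driver's RPC endpoint are all hypothetical names chosen for the example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical sketch of a heartbeat sender (not Spark's class).
class HeartbeatSketch {
    // Illustrative message: executor id plus one metric per active task.
    static final class Heartbeat {
        final String executorId;
        final Map<Long, Long> recordsReadByTask;

        Heartbeat(String executorId, Map<Long, Long> recordsReadByTask) {
            this.executorId = executorId;
            this.recordsReadByTask = recordsReadByTask;
        }
    }

    private final String executorId;
    // Stand-in for the RPC endpoint on the driver (an assumption of this sketch).
    private final Consumer<Heartbeat> driverEndpoint;
    private final Map<Long, Long> activeTaskMetrics = new ConcurrentHashMap<>();

    // One dedicated daemon thread sends all heartbeats.
    private final ScheduledExecutorService heartbeater =
        Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "heartbeat-sender");
            t.setDaemon(true);
            return t;
        });

    HeartbeatSketch(String executorId, Consumer<Heartbeat> driverEndpoint) {
        this.executorId = executorId;
        this.driverEndpoint = driverEndpoint;
    }

    void updateTaskMetric(long taskId, long recordsRead) {
        activeTaskMetrics.put(taskId, recordsRead);
    }

    void taskFinished(long taskId) {
        activeTaskMetrics.remove(taskId);
    }

    // Sends a snapshot of the metrics of the tasks that are active right now.
    void reportHeartbeat() {
        driverEndpoint.accept(new Heartbeat(executorId, new HashMap<>(activeTaskMetrics)));
    }

    void start(long intervalMillis) {
        heartbeater.scheduleAtFixedRate(this::reportHeartbeat, 0, intervalMillis, TimeUnit.MILLISECONDS);
    }

    void stop() {
        heartbeater.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        HeartbeatSketch sender = new HeartbeatSketch("exec-1",
            hb -> System.out.println(hb.executorId + " -> " + hb.recordsReadByTask));
        sender.updateTaskMetric(0L, 42L);
        sender.start(100);
        Thread.sleep(350);
        sender.stop();
    }
}
```

Copying the metrics map before sending gives the receiver a stable snapshot, so later task updates do not change a heartbeat that has already been sent.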